| URLs in this document have been updated. Links enclosed in {curly brackets} have been changed. If a replacement link was located, the new URL was added and the link is active; if a new site could not be identified, the broken link was removed. |

Assessing Customer Satisfaction at the NIST Research Library: Essential Tool for Future Planning

Rosa Liu

Group Leader, Research Library and Information Group

rosa.liu@nist.gov

Nancy Allmang

Materials Science and Engineering Laboratory Liaison

National Center for Neutron Research Liaison

nancy.allmang@nist.gov

National Institute of Standards and Technology

100 Bureau Drive, MS 2500

Gaithersburg, MD 20899

Abstract

This article describes a campus-wide customer satisfaction survey undertaken by the National Institute of Standards and Technology (NIST) Research Library in 2007. The methodology, survey instrument, data analysis, results, and actions taken in response to the survey are described. The outcome and recommendations will guide the library both strategically and operationally in designing a program that reflects what customers want -- in content, delivery, and services. The article also discusses lessons learned that other libraries may find helpful when planning a similar survey.

Introduction

With mounting customer expectations, explosions in technologies and content, and rising costs and declining budgets, assessment activities have become a routine part of library management. Librarians periodically examine customer satisfaction with the library's collection, services, and information preferences in order to assure that customers' changing needs are continually being met. Librarians at the NIST Research Library conducted a comprehensive customer satisfaction survey in 2001 (Silcox & Deutsch 2003b). Since that time, however, technology, content delivery, and storage had changed so much that a follow-up survey was deemed necessary to make sure that the library would remain in sync with current and future customer needs.

The 2001 survey measured customer satisfaction with resources and gauged the impact of earlier journal cancellations on customers' work. The 2007 survey was planned to assess usage of and satisfaction with resources and select library services, including the laboratory liaison program, the information desk, interlibrary loan, and document delivery. The survey also aimed to correlate the demographic data with the information-gathering habits of customers in order to segment their research habits by work unit and length of time worked at NIST. Furthermore, it would examine the use of iPods¹, BlackBerry devices, and other wireless tools; varying materials formats; and the use of collaborative technologies such as wikis to determine customer preferences.

Background

NIST is a non-regulatory federal agency within the U.S. Department of Commerce. Its mission is to promote U.S. innovation and industrial competitiveness by advancing measurement science, standards, and technology in ways that enhance economic security and improve our quality of life. The Research Library is part of the Information Services Division/Technology Services at NIST, whose mission is to support and enhance the research activities of the NIST scientific and technological community through a comprehensive program of knowledge management. The library provides research support to a staff of 3,000, both in the core competencies of the NIST Laboratories (such as traditional physics, chemistry, electronics, engineering) and in new research priorities dictated by national agendas. Listening to customers is critical to our success; "where our customers' needs shape our future" is the tagline on the library's homepage.

Literature searches in preparation for the 2001 and 2007 surveys did not turn up information specifically useful in surveying customers of this unique research library. In order to gather data relevant to its institutional needs, NIST Research Library management elected to design its own survey instrument rather than use LibQUAL+, the Association of Research Libraries' survey instrument that "determines …users' level of satisfaction with the quality of collections, facilities, and library services" (Kemp 2001). That survey allows academic libraries to compare themselves with other libraries that administer the same survey (http://www.libqual.org). Although LibQUAL+ is commonly used by academic libraries, NIST librarians decided it would not be the most useful instrument for this library with its distinct mission and clientele.

As in many academic and special libraries, NIST librarian/laboratory liaisons work closely with various portions of their customer community to provide individualized customer service (Ouimette 2006). NIST also has a Research Library Advisory Board which acts as a two-way conduit by providing input for the library, and carrying important information about the library to customers.

In addition, the Malcolm Baldrige National Quality Award, which provides useful performance excellence criteria, was established by Congress in 1987 and has been administered by NIST since that time (Baldrige National Quality Program 2008). The library has an awareness of the criteria, and over the years has strived for continuous quality improvement; customer feedback has been extremely important in guiding library decisions.

Method of Analysis

The online tool SurveyMonkey was used to execute the survey. There were 20 questions: questions 1 and 2 were mandatory, asking for the respondent's division and number of years working at NIST; the remaining 18 questions were optional. For ease of tabulation, responses were given through radio buttons or drop-down menu picks. Following each question, a free-text field encouraged comments. It was felt that balancing the quantitative and qualitative data in this way would help to interpret what was meant by a response or allow survey analysts to drill down to issues not initially obvious (Silcox & Deutsch 2003b). Please see the Appendix for survey questions.

The 20 questions addressed the following areas:

- Library Resources

- Library Services

- Customer Preferences

- Impact of the library on the customer's work

In the winter of 2006, a consultant was brought in to assist with the design of the survey instrument and analysis of responses. The library staff worked closely with the consultant to ensure that survey questions would ultimately produce useful trend data. It was also important to learn whether the time had come to implement new tools, such as a blog to communicate with library customers or a wiki to enhance communications among customers. A library team reviewed the 2001 questions, identified those to repeat or drop, and developed new ones; of particular interest to staff was a question, new in 2007, regarding impacts of the library on customers' work. To ensure maximum participation, the library promoted the survey through its Research Library Advisory Board, a library newsletter, and electronic bulletin boards.

Lab liaisons launched the survey in March 2007 by sending email messages linking customers to the web questionnaires. The survey was open to receive responses for 3 weeks. During that time we received 629 responses from a target audience of 2900, a 21.6% participation rate. Results of the survey were captured and tabulated directly by the consultant, who considered this a good response rate.
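As a quick check of the arithmetic behind the participation figure, the rate is the number of responses divided by the size of the target audience. The sketch below is illustrative only: the function name `participation_rate` is hypothetical and was not part of the survey tooling. The unrounded quotient is about 21.69%, which corresponds to the 21.6% figure given above when truncated to one decimal place.

```python
def participation_rate(responses: int, invited: int) -> float:
    """Return the share of invited people who responded, as a percentage."""
    if invited <= 0:
        raise ValueError("invited must be a positive number")
    return 100.0 * responses / invited


# Figures taken from the survey description: 629 responses, 2900 invited.
rate = participation_rate(629, 2900)
print(f"{rate:.2f}%")  # prints 21.69%
```

The same function could be reused to compare participation across work units in a follow-up survey, assuming per-unit counts of invitations and responses are available.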

Results and discussions

Results were based on the raw data and graphs extracted from the structured part of the web questionnaire and from the free field comments that permitted participants to clarify and illustrate what they meant. Results yielded positive feedback as well as opportunities for improvements.

- Library Resources. As in 2001, journals continued to be the most valuable resource for NIST scientists with an overwhelming preference for e-journals. Subject coverage was again judged satisfactory in the NIST core competence areas; lower satisfaction continued in newer NIST areas of research such as biosciences and biotechnology.

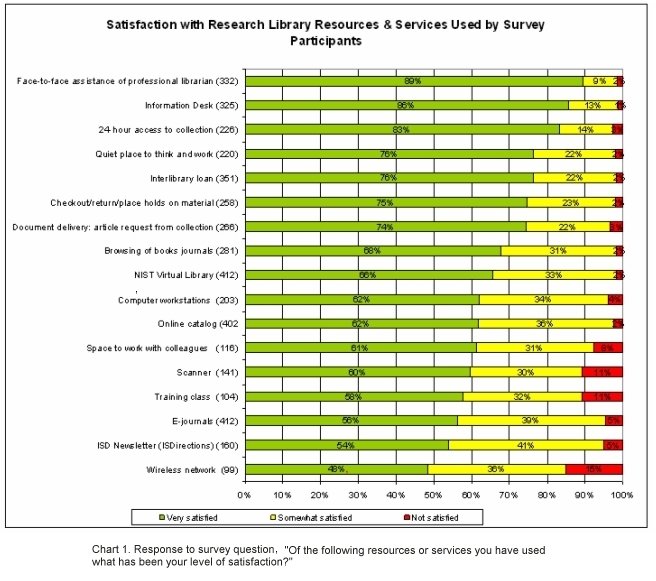

- Library Services. High satisfaction was measured for library services across the board, especially services involving face-to-face assistance with a professional librarian. See figure below.

Seventy-five percent of respondents stated that the greatest barrier to obtaining information was that the resources they needed were not available electronically; 49% stated they were too busy to obtain the information. We discovered that the majority of customers were not aware of the laboratory liaison program of customized library services; of those who knew of it, 98% were satisfied with the service.

- Customer Preferences. As expected, customers frequently used Google and other search engines to seek information needed for their work. Their satisfaction rate with this method of locating information was 91-99%. It was not apparent to customers that Google actually provided access to NIST Library resources through WorldCat and Google Scholar. Sixty percent of customers said they used wikis, while 44% used blogs. Consulting with colleagues ranked high along with Google as resources used by staff of all work units, regardless of their length of service at NIST.

- Impact of the library on customers' work. Preventing duplication of research effort, stimulating innovative thinking, publishing in a refereed journal, presenting at a conference, and making critical decisions were of highest value to library customers. This was the first time the library had asked a question to assess its impact on customers' work. It was used in various internal reports and provided very useful data for the demonstration of the library's impact to the organization as a whole.

The customers were not shy in providing comments, answering not only the questions at hand but making observations on everything else about the library. They recommended new journals, books, and databases, although recommendations were not solicited. The library basked in the survey's positive feedback ("Excellent staff. The best department in the entire NIST.") and noted opportunities for improvement ("Add a real Google search of your resources").

Library's Action Items

Based on the survey results and customer comments, the library made immediate operational improvements and set action items for its FY08 Strategic Plan and Collection Development Policy. Below is a sampling of improvements made or planned as a result of customer response to the survey.

- Operational. All recommendations for resources were extracted, priced, and put on a "wish list." As funds become available, those of highest priority will be acquired. The library recently acquired two important databases that the survey determined were critical to the scientists' needs.

- Strategic. The library incorporated into its 2008 strategic plan the aim to increase electronic resources, particularly e-journals, in new NIST research priority areas.

- Strategic. The library also included in its strategic plan a goal to add electronic journal archives to classic titles when one-time funding is available.

- Strategic. The 2008 strategic plan also expands and steps up marketing of the laboratory liaison program, emphasizing customized liaison services, outreach, and collaboration with the assigned labs in order to position the library as a research partner with its scientists.

- Operational. Plans for 2008 include making the library web site (http://nvl.nist.gov) easier to navigate by implementing links to difficult-to-find information, and making it more "Google-like."

- Operational. Librarians will investigate putting web tutorials at point-of-need on the library web site and utilizing demonstrations given by visiting vendors to educate customers.

- Strategic. Incorporated into the 2008 strategic plan is the aim to investigate ways to educate customers about newer technologies – web 2.0 and beyond, and to investigate using these technologies in the future for younger scientists joining NIST. The library is currently implementing a video podcast to market its library liaison program and has introduced an audiobooks-on-iPod program that is very popular with future managers who are interested in keeping up with their leadership reading.

- Operational. Librarians are revamping communication tools with customers and are in the process of converting the library newsletter from a paper/PDF version to an interactive blog with RSS feeds.

Lessons Learned

Work with a consultant. Working with the right consultant enhanced the survey process. The participation of a third party lent objectivity, especially in the analysis and interpretation of results, as library staff found they were sometimes too close to the subjects they were surveying. Meeting and discussing each individual question with knowledgeable advisors from outside the library improved the clarity of questions whose meaning had seemed obvious to library staff. Conducting a survey of this type was extremely time-consuming, particularly the analysis; employing a consultant saved a great deal of time. Clearly documenting in the Statement of Work the desired format of deliverables, in addition to their content, would have been helpful to all concerned. For example, along with "Final Report with analysis and recommendations," we should have included "raw data in Excel spreadsheet and soft copy of questions in Survey Monkey format." It is also important to spell out dates for deliverables. Allowing time for due diligence in selecting the consultants and having standard questions ready to check their references are also important.

Dry run the questionnaire. Test the questionnaire with a small group to make sure questions are clear. Testing questions with our library advisory board and revising those that were ambiguous improved the questions immensely. Even afterwards, there remained ambiguous wording we did not catch. For example, defining "satisfaction" in "Please rate your satisfaction" would have been helpful. In discussing electronic journals, did "dissatisfied" or "satisfied" refer to a customer's satisfaction with the collection of e-journals, or satisfaction with the smoothness of access to the e-journals? Unless this meaning is clear, it will not be obvious how to make "satisfied" customers "very satisfied."

Craft an introduction. Overstating the length of time it will take to complete the survey can be counterproductive. We heard that potential respondents were putting the survey aside after the first screen, where they read a welcome message estimating the survey would take 20-30 minutes to complete. Changing this to, "Thank you for participating in this 20-question survey," produced more completions.

Include a question on impact. In these times of inflationary increases, tight budgets, downsizing, and trends of shutting down physical libraries, be sure to include a question which will demonstrate the library's impact on the larger organization.

Trend data. Asking questions that led to useful results and demonstrated trends provided additional rich data. Without a previous survey, a library might use other baseline data. Asking a question without a way to use the resulting response is not beneficial.

Follow-up surveys. Subsequent surveys can be shorter and more effective if specifically targeted to and rotated among specific groups or work units with varying needs. A shorter, targeted survey may result in greater participation, and its creation and analysis will certainly be a less labor-intensive process for those conducting it. Currently the library is assessing customer satisfaction with the collection in new subject areas; we plan to ask two questions of a targeted customer base.

Identify potential participants. Identifying in detail, well ahead of time, all groups who were to receive the survey turned out to be more difficult than we expected. Adding groups to a web survey once begun turned out to be extremely difficult and time-consuming.

Don't be discouraged. Interestingly, in spite of a concentrated effort to build up our library's biosciences collection after results of the 2001 survey demonstrated lowest satisfaction in this area, trend data showed that in 2007 the satisfaction level in the broad area of the biosciences had dropped. Building a comprehensive collection in a new area is a long process. In this electronic era, new resources may spring up faster than funds to purchase them.

Take action on the survey results. Taking suggestions for improvements seriously and doing something with the information that the customers provided was extremely important (Silcox & Deutsch 2003a). Quick action remedied situations in the category of "low-hanging fruit."

Welcome negative results. Opportunities for improvement were our most useful survey results. It was only after the need was clear that true improvements could actually be made.

Conclusion

The survey results overwhelmingly supported the presence of the physical library and the library staff, and demonstrated their value to the parent institution. The survey provided valuable evidence-based data to share with customers and stakeholders. It took a year to complete, from planning through final report, and required significant staff time to execute, with the help of a consultant. As a tool for future planning, and to understand our library's customer base, the survey was absolutely invaluable and well worth the time spent.

Notes

1 The identification of any commercial product or trade name does not imply endorsement or recommendation by the National Institute of Standards and Technology.

Appendix

2007 Customer Satisfaction Survey Questions, NIST Research Library

References

Baldrige National Quality Program. 2008. [Online.] Available: {https://www.nist.gov/baldrige} [June 22, 2008].

Kemp, J.H. 2001. Using the LibQUAL+ Survey to assess user perceptions of collections and service quality. Collection Management 26(4):1-14.

NIST Virtual Library. 2008. [Online.] Available: http://nvl.nist.gov/ [June 23, 2008].

Ouimette, Mylene. 2006. Innovative library liaison assessment activities: supporting the scientist's need to evaluate publishing strategies. Issues in Science and Technology Librarianship (46) Spring 2006. [Online.] Available: http://www.istl.org/06-spring/index.html [June 30, 2008].

Silcox, B.P. and Deutsch, P. 2003a. From data to outcomes: assessment activities at the NIST Research Library. Information Outlook 7(10):24-25, 27-31.

Silcox, B.P. and Deutsch, P. 2003b. The customer speaks: assessing the user's view. Information Outlook 7(5):36-41.

SurveyMonkey. 2008. [Online.] Available: http://www.surveymonkey.com/ [June 22, 2008].
