| URLs in this document have been updated. Links enclosed in {curly brackets} have been changed. If a replacement link was located, the new URL was added and the link is active; if a new site could not be identified, the broken link was removed. |

Using ISI Web of Science to Compare Top-Ranked Journals to the Citation Habits of a "Real World" Academic Department

Engineering Library

Cornell University

Ithaca, New York

jpc27@cornell.edu

Abstract

Quantitative measurements can be used to yield lists of top journals for individual fields. However, these lists represent assessments of the entire "universe" of citation. A much more involved process is needed if the goal is to develop a nuanced picture of what a specific group of authors, such as an academic department, is citing. This article presents a method for using ISI Web of Science in conjunction with spreadsheet management to compare lists of top journals from ISI and Eigenfactor with the journal titles that a list of authors is citing in their own publications. The resulting data can be used in collection development, departmental contacts, and in assessing the research interests of an academic department.

Introduction

Over the past decade, a body of literature has accumulated supporting the consensus that most major quantitative measurements of journal quality track together (Elkins et al. 2010). That is, Eigenfactor, Journal Impact Factor, total citation count, and similar measurements yield similar relative rankings of individual journals.

Quantitative measurements of journal impact are based upon comparison with the entire "universe" of citation or, at least, with the large subset of literature citation indexed by ISI Web of Knowledge. These quantitative measurements are increasingly used by academic departments in hiring (Smeyers & Burbules 2011) and by academic libraries in purchasing or cancellation decisions (Nisonger 2004).

The specific applicability of these metrics to "real-world" departments and libraries, whose faculty or patrons will have a specific subset of interests and citation habits, may be questionable. This is especially true for those libraries whose goal is to coordinate collection development with the needs of the departments at their institutions. Eigenfactor, for example, might become a more informative tool for collection development if one considered not only a journal's Eigenfactor ranking, but also examined the journal's actual use by the faculty of a department in a relevant field. Put another way, how representative are the citing habits of the specific group of people that make up an academic department when compared to all such people conducting similar research? How then can an academic librarian make use of that information?

A significant amount of research has already been done in comparing certain quantitative measures, particularly ISI's Journal Impact Factor, to local demand for or use of certain journals. Such investigations have yielded a variety of results. Pan found no relationship between journal use in her library and the supposed quality of those journals as measured by times cited globally or by Science Citation impact factor (Pan 1978). Although Pan wrote before the advent of ubiquitous electronic access to journals, 16 years later, Cooper and McGregor (1994) still found little correlation between local citation of journals and their assessed impact factor. By analyzing requests for photocopied articles in a corporate biotechnology lab, they found that, outside of a small number of multidisciplinary prestige journals (i.e., Science, Nature, PNAS, etc.), ISI impact factor had little predictive utility in measuring journal use.

Schmidt and Davis (1994), examining citation patterns by faculty at an academic library, found a small correlation between journal use measured by citing and those journals' impact rankings. Stankus and Rice (1982) found a much larger correlation between local and global patterns of citation. Examining journals in several disciplinary groupings, they found a strong correlation between local usage of journals and the impact factor of those same journals, with several caveats having to do with the precise character of the journals examined and certain minimum use that would exclude idiosyncratic researchers. But again, their study predated the current research environment in which virtually all reading and citation are done electronically.

When one looks closer to the present day, many studies have cited this prior work, but few seem to have directly compared quantitative measures of journal quality with local citation. Blecic (1999) compared multiple means of assessing journal use within a library but did not extend the comparison to journal impact factors. Jacobs et al. (2000) used local citation analysis as a means of measuring the utility of full-text research databases. Although they cited Stankus and Pan, they did not compare local citation patterns to impact factors.

While there is clearly space for more current research in this area, a full resolution of these issues is beyond the scope of this paper. The author hopes to present a relatively simple method by which librarians who work with academic departments can determine to what degree they should rely on the top journal lists generated by quantitative measures of journal impact. This information has great potential utility for collection development. It can also simply give a librarian a way to analyze what a group of scholars at their institution is citing in its own research.

Methods

The raw data necessary for a comparison of a group of faculty to all authors in a discipline is somewhat problematic to obtain. ISI Web of Knowledge and its component database, ISI Web of Science, make it relatively simple to search "forward" to find articles--and thus journals--that cite the works of a specific author or even multiple authors. But the reverse is far more difficult: Finding the journals cited by all articles by a specific author or multiple authors is not a straightforward function of the ISI system (ISI 2012).

To retrieve this information, it is necessary to determine a set of authors. A list of full faculty in a department may suffice, but librarians may also wish to include adjunct faculty, lecturers, technicians, post-doctoral fellows, or graduate students. This information can usually be obtained from departmental web sites or university directories. This list of authors should then be collected into a single search string with first initials wild-carded.

For example, below is a Web of Science author search string representing all regular faculty with appointments to Cornell University's Department of Earth and Atmospheric Sciences (Faculty of Earth and Atmospheric Sciences 2011):

allmendinger r* or allmon w* or andronicos c* or barazangi m* or brown l* or cathles l* or chen g* or cisne j* or colucci s* or degaetano a* or derry l* or green c* or hysell d* or jordan t* or kay r* or kay s* or lohman r* or mahowald n* or phipps-morgan j* or pritchard m* or riha s* or white w* or wilks d* or wysocki m*
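For longer faculty lists, a string in this form can be assembled by script rather than typed by hand. The following is a minimal Python sketch, not part of the original method. The function name `build_author_query` and the (surname, initial) input format are assumptions made for illustration only.

```python
def build_author_query(names):
    """Join (surname, first initial) pairs into one wild-carded OR query."""
    terms = [f"{surname.lower()} {initial.lower()}*" for surname, initial in names]
    return " or ".join(terms)


# The first three names from the example string above.
faculty = [("Allmendinger", "R"), ("Allmon", "W"), ("Andronicos", "C")]
print(build_author_query(faculty))
# allmendinger r* or allmon w* or andronicos c*
```

Building the string from a list keeps the spacing before each asterisk uniform, which is easy to get wrong when typing two dozen names.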

Note that this example is practical because the list is of manageable size. In the case of much larger institutes or research departments, it may be necessary to reformulate the search query to retrieve hits by address or name of the institution, although Web of Science does not always use high-quality name authority control on such terms as university and department names.

Once a query like this is formulated, the following series of steps in ISI Web of Science generates first a list of publications, then of cited journal titles:

- Limit date range as desired.

- Perform search.

- Facet by institution (to exclude authors' citations while they were employed by other institutions and to exclude authors with similar names not employed by the institution in question).

- Select all records and export to a Marked List.

- Within the Marked List, deselect all fields except for Cited References.

- Export via "Save to Tab-Delimited (Win)."

- Save locally, rather than directly opening the resulting file.

- Open with a spreadsheet program (e.g., Microsoft Excel), choosing whatever options are necessary to tab-separate entries into separate cells.

- For the purposes of the example given below, citations to journals outside the years 2004 and 2005 were excluded in order to render the comparison with both ISI categories and Eigenfactor categories valid (the latter covers only citations to articles published in 2004 and 2005). The precise date-range can be as broad or narrow as desired.

- Delete Column A: It will consist of various field delimiters and is not relevant data.

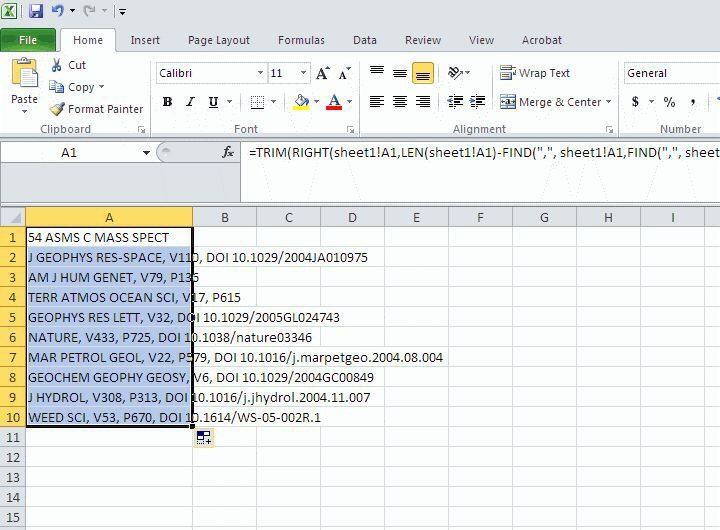

- Truncate the citations using a formula, such that the first information to appear is, in most cases, the journal title. For example, if using Microsoft Excel with ISI Web of Science citations, it is usually possible to delete all content to the left of the journal title by creating a new tab and then filling in the cells by using this formula:

=TRIM(RIGHT(SHEET1!A1,LEN(SHEET1!A1)-FIND(",", SHEET1!A1,FIND(",", SHEET1!A1)+1)))

See the appendix for screen shot examples of this process.

- Arrange all data into a single column.

- Sort data alphabetically.

- Note most frequently occurring journals and compile totals.
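The last few steps above (truncating, filtering by year, sorting, and totaling) could also be done outside a spreadsheet. The Python sketch below is one hypothetical way to do so and is not the procedure the author used. It assumes each citation has already been read into a string in "author, year, journal, ..." order. Unlike the spreadsheet formula, which keeps everything after the second comma, it isolates the third field as the journal title and skips citations that do not fit the pattern. The function names and the sample citations are invented for illustration.

```python
from collections import Counter


def split_citation(citation):
    """Split a citation string on commas and strip surrounding spaces."""
    return [part.strip() for part in citation.split(",")]


def count_cited_journals(citations, years=None):
    """Count journal titles, assuming 'author, year, journal, ...' order.

    Citations that do not fit that pattern are skipped rather than guessed at.
    """
    counts = Counter()
    for citation in citations:
        parts = split_citation(citation)
        if len(parts) < 3 or not parts[1].isdigit() or not parts[2]:
            continue  # e.g. a reference with no year or no title field
        if years is not None and int(parts[1]) not in years:
            continue  # outside the requested range of cited years
        counts[parts[2].upper()] += 1
    return counts


# Invented placeholder citations, not taken from the study's data.
sample = [
    "DOE J, 2004, NATURE, V1, P1",
    "ROE A, 2005, SCIENCE, V2, P2",
    "DOE J, 2005, NATURE, V3, P3",
    "POE B, 1999, NATURE, V4, P4",
]
counts = count_cited_journals(sample, years={2004, 2005})
print(counts.most_common())
# [('NATURE', 2), ('SCIENCE', 1)]
```

Because non-conforming citations are silently skipped, it would be prudent to check how many were dropped before relying on the totals.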

Results

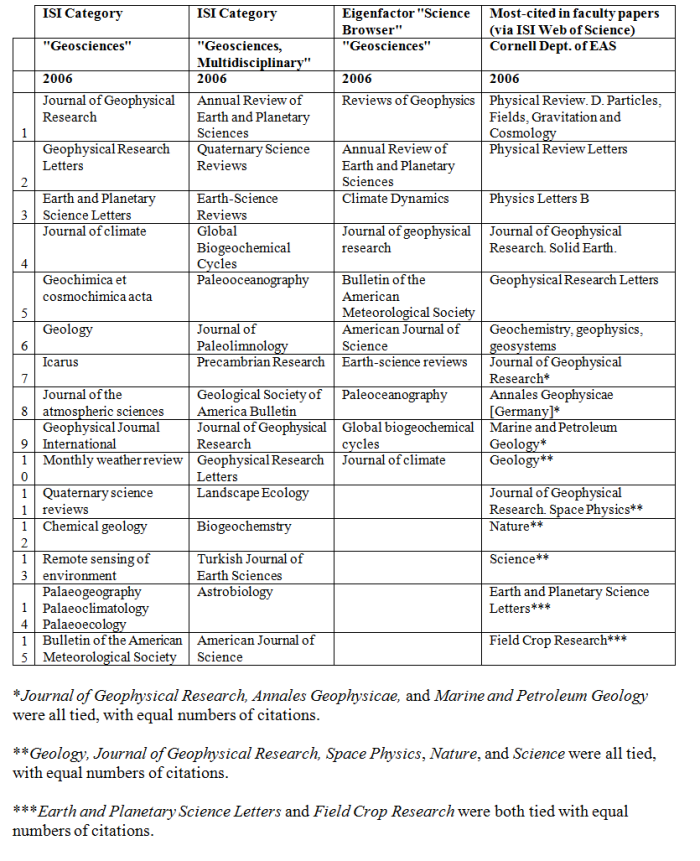

By putting this list of authors through the aforementioned process, it is possible to generate something that approximates an apples-to-apples comparison with top journal lists offered by both ISI Categories and Eigenfactor categories (ISI 2012; Eigenfactor 2012).

The results can then be placed in parallel columns of top-ranked journals, comparing the journal titles cited to comparable categories within ISI and/or Eigenfactor.
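Once the local totals exist, the overlap between the locally cited titles and a ranked list can be checked mechanically. The sketch below is illustrative only. The function `compare_with_ranked_list`, the default cutoff of 15, and the placeholder titles are assumptions. It also assumes that titles are spelled identically in both lists, so abbreviated titles would first need to be matched to their full forms by hand.

```python
def compare_with_ranked_list(local_counts, ranked_titles, top_n=15):
    """Split the locally most-cited titles into those on a ranked list and those not."""
    # Sort by descending count, then alphabetically so that ties are stable.
    ordered = sorted(local_counts.items(), key=lambda item: (-item[1], item[0]))
    local_top = [title.upper() for title, _ in ordered[:top_n]]
    ranked = {title.upper() for title in ranked_titles[:top_n]}
    shared = [title for title in local_top if title in ranked]
    local_only = [title for title in local_top if title not in ranked]
    return shared, local_only


# Invented placeholder titles and counts, not the study's results.
local_counts = {"JOURNAL A": 12, "JOURNAL B": 7, "JOURNAL C": 3}
ranked_titles = ["Journal B", "Journal D"]
shared, local_only = compare_with_ranked_list(local_counts, ranked_titles)
print(shared)      # ['JOURNAL B']
print(local_only)  # ['JOURNAL A', 'JOURNAL C']
```

The titles in `local_only` are the ones a librarian might examine first, since they are heavily cited locally but absent from the ranked list.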

Discussion

- 2006 was chosen as the common year for all four columns since it was the year for which the Eigenfactor Science Browser's listing was compiled and it was thought advantageous to compare like with like.

- The Eigenfactor Science Browser cluster "Geosciences" only includes 10 journal titles. Nevertheless, the author chose 15 for the other columns for additional comparison.

- Two different ISI Categories seemed potential disciplinary approximations for the type of research done by Cornell's Department of Earth and Atmospheric Sciences: "Geosciences" and "Geosciences, Multidisciplinary." Both categories were included.

- Eigenfactor can include citations to journals outside of ISI Web of Science, the basis of the three other columns/categories. All journal titles included in the top 10 Geoscience journals category in Eigenfactor are indexed by ISI.

- Web of Science indexes only journal articles, which excludes an author's books, conference proceedings, and other publications, as well as the citations embedded within them. Depending on the field, these can be important categories of literature.

- Since column 4--the specific findings of this study--is based upon total citation counts to individual journal titles and not upon formulae that seek to balance total citations with total citable items within a journal, it is necessary to acknowledge that the picture may be skewed by one or more researchers who repeatedly cite a particular journal.

Despite these imprecisions, particularly the last three points above, the author believes this comparison is fundamentally valid. ISI categories and Eigenfactor both present themselves as authoritative--and indeed, quantitative--listings of important journals by discipline. Within the context of a librarian seeking to learn something about a department's citation habits, this remains a worthwhile exercise. Use of this method can reveal what journals an author or group of authors cites in their own articles and, through them, the research priorities of a department or research center, trends in the use of specific journals over time, and congruence of the library's collections with the citation habits of faculty.

Conclusions

This sample case pertains only to a specific university department; the findings may not be easily generalized. In this study, physics journals and major multidisciplinary science journals such as Science and Nature were more highly cited than many geology titles. The broader principle demonstrated is clear: the citation patterns of an academic department can differ markedly from lists of quantitatively ranked top journals, even in a corresponding field.

This process yields a significantly less generalized result than do the title lists put forward by ISI, Eigenfactor, or even subject bibliographies. The results of a department-specific journal search have several potential uses for librarians. For collection development, it can inform decisions about which journals to purchase, retain, or cancel. It might be interesting to investigate further if faculty members are heavily citing a resource to which the library does not subscribe. If the library is seeking collaboration or cost-sharing, either externally or internally, a list generated by this process can provide a basis for the design of shared collections.

Faculty certainly read more journals than they either publish in or cite in their own research. The level of such activity can generally be estimated through use statistics for online resources or through surveys of the faculty. But such statistics reveal only that someone on campus clicked on a particular link and looked at a page. The method described in this paper offers a glimpse into what faculty are truly reading, to the extent that they find it worthwhile to cite such research in their own publications. This rests on the implicit assumption that faculty actually read what they cite: in informal discussions of this paper with faculty, a few sheepishly admitted that they did not always do so!

The findings can also serve as a basis for better understanding a department: What are its areas of research emphasis? What are the interests and reading habits of its faculty? A librarian newly assigned to be a liaison to a department of which he or she has little firsthand or even disciplinary knowledge might take advantage of this system to gain a quick understanding of the research interests of the faculty.

The practical upshot of this research is unlikely to come as a surprise to most thoughtful librarians, particularly those with experience collaborating with academic departments. Librarians would do well to qualify any reliance on quantitative journal rankings with inquiries into the specific subset of interests and publication habits of the faculty they serve. It may be that nothing short of a personal interview will truly establish what an individual researcher in a specific discipline uses and values in research literature. But the process described here provides a happy medium: a way to begin an inquiry into the citation and research habits of the faculty one works with and to potentially refine the collections of the library accordingly.

References

Blecic, D.D. 1999. Measurements of journal use: an analysis of the correlations between 3 methods. Bulletin of the Medical Library Association 87(1): 20-25.

Cooper, M. and McGregor, G. 1994. Using article photocopy data in bibliographic models for journal collection management. Library Quarterly 64 (10): 386-413.

Eigenfactor. 2012. Mapping science. Science Browser. [Internet]. [Cited 2012 May 11]. Available from: http://eigenfactor.org/map/index.php

Elkins, M., Maher, C., Herbert, R., Mosley, A., Sherrington, C. 2010. Correlations between journal impact factor and 3 other journal citation metrics. Scientometrics 85(1) (10): 81-93.

Faculty of Earth and Atmospheric Sciences. 2011. Faculty for Earth and Atmospheric Sciences. [Internet]. [Cited 2012 February 7]. Available from: {http://www.eas.cornell.edu/eas/people/faculty.cfm}

ISI Web of Knowledge. 2012. Web of Knowledge. [Internet]. [Cited 2012 February 7]. Available from: http://www.webofknowledge.com/

Jacobs, N., Woodfield, J. and Morris, A. 2000. Using local citation data to relate the use of journal articles by academic researchers to the coverage of full-text document access systems. Journal of Documentation 56(5): 563-583.

Nisonger, T.E. 2004. The benefits and drawbacks of impact factor for journal collection management in libraries. The Serials Librarian 47(1-2): 57-75.

Pan, E. 1978. Journal citation as a predictor of journal usage in libraries. Collection Management 2 (Spring): 29-38.

Schmidt, D., and Davis, E.B. 1994. Biology journal use at an academic library: a comparison of use studies. Serials Review 20(2): 45

Smeyers, P., and Burbules, N.C. 2011. How to improve your impact factor: questioning the quantification of academic quality. Journal of Philosophy of Education 45(1): 1-17.

Stankus, T. and Rice, B. 1982. Handle with care: use and citation data for science journal management. Collection Management 4 (Spring/Summer): 95-110.

Appendix

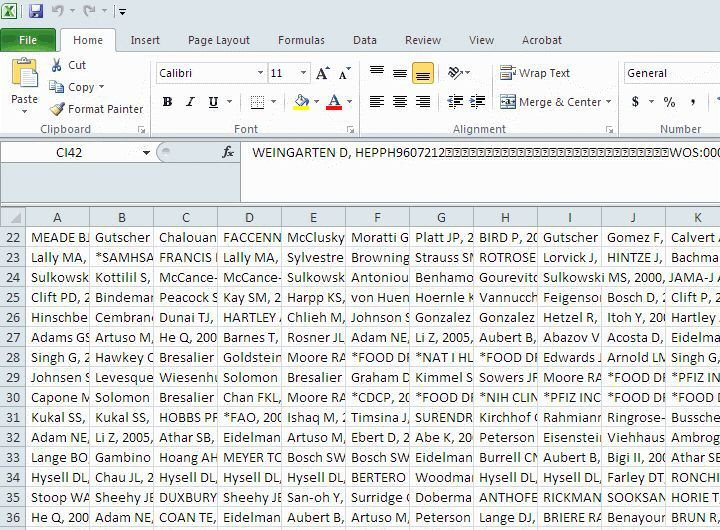

Figure 1: The raw data exported into Excel, with the original column A deleted. Each cell is a single citation. The difficulty is that for most entries, Author and Date of publication come before journal title.

Figure 2: Open a new tab ("Sheet2" in most cases) and place the formula =TRIM(RIGHT(sheet1!A1,LEN(sheet1!A1)-FIND(",", sheet1!A1,FIND(",", sheet1!A1)+1))) into the top-left cell (Cell A1). Note that this formula assumes your first sheet was Sheet1. If not, make appropriate substitutions within the formula above.

Figure 3: Mouse over the lower-right corner of that cell until the 'plus'-sign appears and then drag it out and down. This will populate the cells in this sheet with the contents of the corresponding cells in the previous sheet, except that everything to the left of the second comma will be removed. This means that the cells will then begin with the journal title and can later be sorted to allow you to count up total cites for each journal title.
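For readers who prefer a script to a spreadsheet, the effect of the formula in Figure 2 can be approximated in Python. This is a sketch of equivalent logic, not something the original procedure requires. The function name `text_after_second_comma` is hypothetical, and whitespace is normalized in roughly the way Excel's TRIM does (leading and trailing spaces are removed and internal runs are collapsed).

```python
def text_after_second_comma(cell):
    """Return the text to the right of the second comma, or None if there is none."""
    first = cell.find(",")
    second = cell.find(",", first + 1) if first != -1 else -1
    if second == -1:
        return None  # the Excel formula would show an error for such a cell
    # Approximate TRIM: drop outer whitespace and collapse internal runs.
    return " ".join(cell[second + 1:].split())


# Invented placeholder citation, not taken from the study's data.
print(text_after_second_comma("DOE J, 2004, NATURE, V1, P1"))
# NATURE, V1, P1
```

As with the formula, the result still carries the volume and page fields after the journal title, so sorting groups the citations by journal without isolating the title itself.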
