URLs in this document have been updated. Links enclosed in {curly brackets} have been changed. If a replacement link was located, the new URL was added and the link is active; if a new site could not be identified, the broken link was removed.

Using Minute Papers to Determine Student Cognitive Development Levels

Lia Vella

Instruction & Research Services Librarian

Arthur Lakes Library

Colorado School of Mines

Golden, Colorado

lvella@mines.edu

Abstract

Can anonymous written feedback collected during classroom assessment activities be used to assess students' cognitive development levels? After library instruction in a first-year engineering design class, students submitted minute papers that included answers to the question "What are you left wondering?" Responses were coded as low, medium, or high, categories corresponding to cognitive stages, using a codebook developed largely from Perry's model and from two semesters' worth of prior student feedback. Preliminary analysis suggests that subjects exhibit characteristics consistent with the range of cognitive levels identified by previous research at this institution, but that they are weighted toward the lower end of the spectrum. While this study does not reveal cognitive development levels as reliably as the work of previous researchers, it does propose an easy method for identifying the general cognitive development levels of student cohorts and offers recommendations for using this knowledge to improve instructional effectiveness.

Introduction

Upon introducing classroom assessment activities into our one-shot information literacy sessions with first-year students, librarians at this institution noticed that some responses seemed to indicate that students were operating at lower cognitive development levels than we had assumed. In particular, many questions had a marked dualistic bent. For example, after a session on evaluating sources in which student teams used bulleted lists of criteria to rate sample information sources, we saw many questions asking "how low" a rating could be before a source could no longer be used. Other students wondered whether the Library keeps a list of all the authoritative web sites they could use for their project, or whether they would be able to find the "right" article for their report. On the other hand, some questions were more complex, such as how to evaluate two articles that both seem reputable but contain conflicting information, or whether the peer review system ever fails. Knowing where the average first-year student at our institution falls on a standard cognitive development scale could help librarians, as auxiliary teaching faculty, reach the primary student population more effectively by designing information literacy instruction that meets students where they are in their intellectual development.

The literature on the use of cognitive development theory in educational research indicates that measuring students' cognitive positions can be a lengthy and intensive process. Although a questionnaire has been developed to test epistemological beliefs (Schommer 1994), which are similar to cognitive development levels, this study seeks to test the use of an even quicker and more unobtrusive method for gaining insight into student cognitive levels. This method would offer a librarian-instructor a "snapshot" view of students' cognitive development status, so that programmatic instruction can reach the intended population more effectively.

Background

The study took place at a public institution that educates undergraduate, master's, and Ph.D.-level students in STEM fields. Ten faculty librarians serve the 4,400 undergraduates and 1,300 graduate students, as well as nearly 300 faculty and other members of the university and community. The school has no information literacy mandate, although a brief document describing the desired characteristics of a graduate from the institution specifies that students will, among other things, exhibit skills in the "retrieval, interpretation, and development of technical information by various means" (Colorado School of Mines 2014). This provides a rationale for teaching many of the concepts identified in the ACRL Information Literacy Standards (2000), but there has never been a for-credit course on information literacy or an information literacy competency test for students. Two required first-year courses are the primary venues for information literacy instruction: "Nature & Human Values" (NHV), a writing-intensive class covering ethics and the humanities; and EPICS 1 (Engineering Practices Introductory Course Sequence, part 1), which emphasizes open-ended problem-solving and design principles within a client-based, real-world project setting. Upper-division classes receive discipline-specific information literacy instruction only rarely, when individual faculty members request it.

Information literacy instruction is standardized in EPICS 1 but not in NHV. The EPICS 1 schedule and syllabus are the same for all sections, and every student receives the same information literacy session during one week of the semester; the individual sections of NHV, by contrast, vary considerably in scheduling and content. Not all NHV instructors request librarian instruction. Of those who do, many prefer a one-shot session, while others choose a graduated "just-in-time" series of mini-sessions; occasionally, an instructor requests a one-shot session plus an additional shorter session. The NHV instructors who do not involve the library often teach research skills to their students themselves, at the library or elsewhere, but the details of this instruction are unclear.

It is practically impossible to introduce all students to a full suite of information literacy concepts. Also, because library instruction is heavily weighted toward the first year or two, many students do not remember or continue to practice good information literacy habits, as responses to recent surveys of graduating seniors suggest (Schneider 2012; Schneider 2013; Schneider 2014). It is therefore crucial that the limited instruction students do receive be targeted to an appropriate level of intellectual development so that it is more relevant and more "sticky."

Cognitive Development in the Educational Setting: The Literature

Perry's (1970) work on intellectual development in college-aged individuals is the bedrock for much cognitive development research in the educational setting. Perry identified nine "positions" of development spanning the lower "dualistic" stages, through "multiplicity," and up to the higher "relativistic" positions (see Table 1 for more on Perry's positions). Perry argued that because reaching the higher levels of development is difficult, students were likely to retreat from the development process or become disengaged unless faculty provided appropriate encouragement, recognition, and confirmation, especially by forming communities in which students would feel a sense of belonging. King & Kitchener (1994) built in part on Perry's work to develop a model of reflective judgment, which has been tested via longitudinal and cross-sectional studies of various cohorts, including undergraduate students, graduate students, and adults with and without a college background. Like Perry, they advocate changes in the higher education system, such as including the affective aspects of learning, in order to improve students' higher-order thinking skills.

Perry's model has also been critiqued and extended. In Women's Ways of Knowing, Belenky et al. (1986) use Perry's model to understand how the intellectual development patterns of women might differ from those of men. Their longitudinal study of 135 adult women included interview questions based on Perry's model in order to position the subjects on an established "map." Belenky and colleagues' work resulted in a model of five stages of intellectual development specific to women: Silence, Received Knowledge, Subjective Knowledge, Procedural Knowledge, and Constructed Knowledge.

Table 1 Perry's Cognitive Development Model

| Perry Position | Characteristic Beliefs | Correlation to Codebook Categories in This Study |
|---|---|---|
| Positions 1-2: Simple Dualism | The world is black and white. There is a right answer to every question. Authorities know the right answer, and they are supposed to teach the student what the answer is. | Low |
| Position 3: Complex Dualism | Authorities may not agree about the correct answer, or it may be that no one knows the correct answer, but a "right answer" does exist. Therefore, "everyone is entitled to their own opinion." | Medium |
| Position 4: Late Multiplicity | Ideas can be better or worse rather than right or wrong. Students may think critically because they perceive that is "what the professors want." | High |
| Positions 5-6: Relativism | Solutions to problems can be shades of gray rather than black and white; philosophical or speculative questions can be asked and understood. | High |
| Positions 7-9: Commitment in Relativism | Commitments to answers may be made based on one's own experiences and choices; understanding that commitment implies continued pursuit of knowledge despite tensions around unknowns or conflicts. | NA |

Based on Perry 1970; Jackson 2008; Rapaport 2013

Schommer (1994), on the other hand, noted that the linear nature of the models proposed by Perry and others is limiting, and argued that cognitive growth may not be a stepwise process but a cyclical one, in which a subject may be at a more advanced stage in one knowledge area and less so in another. Her formulation ranks someone as more or less evolved in four categories: structure of knowledge (simple or complex); certainty of knowledge (absolute or evolving); control of knowledge (fixed or incrementally changing); and speed of knowledge (quick or gradual). Schommer's model differs considerably from those of Perry and the researchers who followed him, and it was not used in this research, but it is relevant to this discussion because studies comparing cognitive development across knowledge domains have used it.

Educators have since applied these models of intellectual development in various ways, including several examples specific to engineering education. Michael Pavelich et al. (1995; 1996) conducted extensive studies of engineering students at this institution to identify cognitive development stages and the effects of open-ended problem-solving course sequences. They determined that students generally advanced one to two "positions" during their time at the school after participating in the problem-solving courses¹, but that this still left them at a level too low for industry. Pavelich and others also reported on subsequent efforts to develop instruments that would allow more convenient measurement of student intellectual development levels (e.g., Olds et al. 2000). Marra, Palmer, and Litzinger (2000) also used the Perry model to evaluate engineering students at Penn State University. In that study, one group of students took an open-ended engineering design course while another did not; afterward, the group that took the course had a higher average Perry position than the group that did not.

Do engineering students develop in ways that differ from students in other disciplines? According to Schommer & Walker (1995), epistemological development is largely domain-independent: test subjects' beliefs about knowledge were much the same from one discipline to the next, except that beliefs about "certainty of knowledge" differed between mathematics and the social sciences. The authors suggest that this difference reflects the nature of knowledge in these two domains: knowledge in mathematics is perceived to be "certain" by nature, whereas this is less likely to be the case in the social sciences.

Several authors have applied cognitive development models to information literacy instruction. Gatten (2004) considers the theories of Perry as well as Chickering & Reisser's "vector model" of psychosocial development and Kuhlthau's theory on the affective aspects of the information seeking process. He maps these three models to the ACRL Information Literacy Standards and discusses how students' positions within the developmental models might influence their ability to master the Information Literacy concepts contained within the ACRL Standards. Finally, he offers suggestions on how to teach information literacy concepts effectively to students at various levels of development, and recommends that librarians embed themselves in programs that allow them to interact with students throughout the course of their college years.

Jackson (2008) maps positions/stages in the models of Perry and King & Kitchener to the 2000 information literacy standards established by ACRL; she makes recommendations for instruction librarians regarding which aspects of information literacy are best taught at various levels of students' development. Fosmire (2013) describes the application of these models and related suggestions to teaching undergraduate science and engineering students.

Despite the presence of literature relating cognitive development theory to information literacy instruction, no one has reported an attempt to measure students' cognitive levels in this context. Perry, King & Kitchener, and Belenky, et al., used extended interview sessions over a period of time (years, in some cases), as did other researchers who used these models (viz. Marra, et al., and Pavelich, et al.). Indeed, this kind of measurement is considered to be difficult, with "no agreed-upon technique for assessing the Perry positions. … Perry himself preferred an approach that is built around an open and leisurely interview" (Belenky et al. 1986: 10). Following from the assumption that most instruction librarians do not have the time to conduct "leisurely interviews," this study explores the possibility of gaining insight into students' cognitive levels using a quick classroom assessment exercise that can serve multiple purposes.

Methods

The "minute paper" assessment technique was established as a regular part of our lower-division library instruction sessions in January of 2014. At the end of their session on Evaluating Sources, EPICS 1 students were asked to respond to these questions: "What is the most important thing you learned in this session?" and "What are you left wondering?" The student responses were collected and their questions answered in a written document that was delivered to the classroom instructor for distribution to all students. Similarly, students in NHV classes completed minute papers responding to these prompts: "What is your biggest question about library research?" and "Have you taken, or are you currently taking, EPICS 1?" The assumption was that the first question would prompt the same kind of response as the "left wondering" question the EPICS students answered; the second question was simply used to determine how many students had already experienced the library instruction session on source evaluation.

Student feedback throughout 2014 revealed a range of cognitive levels, as indicated by the type of questions asked and the assumptions they implied; questions indicative of the lower end of the spectrum were especially noticeable. Using data from the Spring and Fall 2014 minute papers, a Codebook was developed for analyzing the data collected from the sample population for this study (see Appendix 1). To create the codebook, all of the minute papers (on 4"x6" index cards) collected from the Spring and Fall 2014 EPICS students and Fall 2014 NHV students were gathered together and sorted along a continuum of "higher" and "lower" piles based on the author's understanding of Perry's model, guided in particular by the tables in Jackson (2008) and Rapaport (2013). The cards were then consolidated into fewer piles until three remained, each containing responses clearly indicative of the representative traits for Low, Medium, and High (see Table 1 and Appendix 1 for specifics). A set of the most representative responses, addressing various topics, was selected from each pile and recorded in one document per level. From these three documents, the most useful and indicative responses for each level were chosen and copied into the Codebook document.

The Codebook consists of three basic levels:

- Low (similar to "simple dualism" in Perry's model): responses indicate a belief in black and white answers and authorities who are always correct; that there must be a "right" answer or information source for every problem or assignment and a correct way to search for this; the librarian/instructor's job is to show students how to do this. Vocabulary includes terms such as "exact," "correct," "cut and dry," "always". Examples of responses at this level:

- Where exactly am I going to be able to find exact resources?

- I wonder if it is better to always use an article instead of a web site

- Is there a cut and dry way to tell if a source is scholarly?

- Medium (similar to "complex dualism"): responses ask questions or make statements indicating an acceptance of disagreement between authorities, although they still indicate a belief in correct answers and want to know which answers are to be believed. Vocabulary may include terms such as "trust," "reputability," and "conflicting." Examples of responses include:

- What is the most efficient way to find all of these scholarly articles?

- I am still worried about conflicting information and which to trust more

- High (similar to "Late Multiplicity" or "Early Relativism"): responses indicate the ability to see solutions to problems in shades of gray rather than black/white or "good/bad" terms. They are more likely to ask philosophical or speculative questions. Vocabulary may include terms such as "determine," "differentiate," or "judge." Some examples:

- How much time will I need to actually spend researching?

- How can I know who sponsors the article?

- How many websites have I used in research that weren't actually very reputable?
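The vocabulary cues listed for each level could, in principle, be used to pre-sort cards before a human reads them. The sketch below is a hypothetical aid, not the method used in this study (all coding here was done by hand against the Codebook); the cue lists are copied from the level descriptions above, and the function name `suggest_levels` is illustrative only.

```python
import re

# Hypothetical pre-sorting aid: cue words copied from the Codebook's
# level descriptions. A match only suggests a level; a person still codes the card.
CUE_WORDS = {
    "Low": ["exact", "correct", "cut and dry", "always"],
    "Medium": ["trust", "reputability", "conflicting"],
    "High": ["determine", "differentiate", "judge"],
}


def suggest_levels(response: str) -> list[str]:
    """Return the Codebook levels whose cue words appear in a response.

    An empty list means no cue matched and the card needs ordinary human
    coding; more than one level means the cues conflict.
    """
    text = response.lower()
    matches = []
    for level, cues in CUE_WORDS.items():
        # Word boundaries keep a cue from matching inside a longer word
        if any(re.search(r"\b" + re.escape(cue) + r"\b", text) for cue in cues):
            matches.append(level)
    return matches


print(suggest_levels("Is there a cut and dry way to tell if a source is scholarly?"))
print(suggest_levels("How can I know who sponsors the article?"))
```

Applied to the examples above, it flags "Is there a cut and dry way to tell if a source is scholarly?" as Low but returns nothing for High examples such as "How can I know who sponsors the article?", which illustrates why cue words could at most supplement, never replace, reading each response against the Codebook.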

Categories correlating to the higher Perry positions are not included because the sample responses used to develop the codebook did not indicate that any members of the student population are at this level. This decision is also justified by the research literature, which indicates that students are unlikely to demonstrate advanced cognitive development positions while in college (Perry 1970; King and Kitchener 1994; Pavelich and Moore 1996; Marra et al. 2000).

At the beginning of the Spring 2015 semester, IRB exemption was requested from and granted by the campus Human Subjects Research Committee. The study population (n=463) consisted of the Spring semester EPICS 1 students. All 21 class sections (~25 students each) attended a 50-minute instruction session on Evaluating Sources at the library; this interactive lesson asked small groups of students to evaluate several pieces of information and rank them on a 1-5 scale for Scholarliness and Authoritativeness using checklists developed by library faculty. Each session was led by one of six librarians, but all followed the same lesson plan. At the end of each session, the students received a paper form with an informed consent statement, two check-boxes to indicate age status (under 18, or 18 or older), and two feedback questions: "What is the most important thing you learned in this session?" and "What are you left wondering?" (see Appendix 2). Students were directed to respond anonymously to the two questions, check the appropriate box, and drop the completed form into a box on their way out of the classroom. They were informed that they would receive a written response to their "left wondering" question through their instructor, and that if they did not want their response to be included in the study, they should not check either box.

Response forms were collected, counted, and separated into valid and invalid responses based on whether the "over-18" box was checked. The valid responses were then coded as low, medium, or high using the Codebook, based on the answers to "What are you left wondering?" Responses that could not easily be coded into one of the three categories were set aside and coded later through careful comparison with the Codebook description and, where applicable, with previously coded responses. After coding began, it became clear that a fourth category was warranted for procedural questions such as "how do I check out a library book?" or "how do I use the campus VPN?" A Procedural category was therefore created, and all previously coded responses were reviewed and re-coded where relevant.

Data were recorded using Microsoft Excel. In addition to calculating numbers and percentages of responses by category, the analysis compared results across sections to identify any anomalies that could be ascribed to differences in librarian teaching style or content; none were found.

Data were further analyzed for topical distribution and possible correlation between cognitive development level and type of question posed. The No Answer responses, which could not be analyzed for cognitive development, were left out of this stage of analysis.

The topics were determined using categories that were established for use in sorting minute-paper feedback from previous semesters. The 11 established categories (in addition to "No Question"), listed alphabetically, are:

- Access: questions from students who understand the process of searching databases but need help getting oriented to the materials provided through the Library's subscriptions or through ILL. These questions may ask whether we have access to specific databases, or for a list of available databases. They may ask about getting the full text of a specific resource, or about getting the full text of an article we don't have access to through our subscriptions.

- Citing: when to cite, how to cite, what style to use

- Evaluating Sources: clarification between scholarly and authoritative and credible; questions about reliability or about how high on the "rankings" scale a source must be in order to be used.

- Format: questions about using digital vs. print, or book vs. article

- Getting Help: when and how to contact a librarian or other help; librarian qualifications and the type of help they can provide

- Miscellaneous: any question that cannot be sorted into one of the other categories

- Off-campus use: mostly these questions are related to the campus VPN; some pertain to post-graduation access

- Production of Information: questions about the peer-review process, the cost of library materials or subscriptions; how search engines work or database information architecture

- Research Process: where or how to get started with research; how long it should take, the best way to use an information source

- Search: narrowing or broadening searches; how to come up with keywords when unfamiliar with a subject, how to deal with large numbers of results

- Structure/Navigation: questions about the physical library (e.g., how to find certain materials, equipment, or study rooms); some questions specific to navigating the library's web site

Responses were sorted by topic within their respective cognitive development level groupings and recorded using Microsoft Excel.

Results

Most responses could be coded easily using the established codebook. Once the Procedural category was added to account for simplistic questions that offered no insight into students' cognitive characteristics, the remaining responses were fairly easy to sort.

Of 463 responses, 40 were invalid. Of the remaining 423 responses, 105 (25%) fell in the Low range, 93 (22%) in the Medium range, 42 (10%) in the High range, and 17 (4%) in the Procedural category; 166 (39%) were non-responsive, i.e., they either contained no "left wondering" question or specifically stated that the student had no question or that all their questions had been answered. With the 166 non-responsive responses removed (leaving 257), the percentages are 41% Low, 36% Medium, 16% High, and 7% Procedural.
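Because two sets of percentages are reported, with and without the non-responsive cards, it may help to show how each is derived from the counts. The minimal sketch below uses only the five counts given in this paragraph; the helper name `percentages` is hypothetical, and rounding to whole numbers is an assumption about how the figures were reported.

```python
# Counts reported for the 423 valid responses (463 collected, 40 invalid)
counts = {
    "Low": 105,
    "Medium": 93,
    "High": 42,
    "Procedural": 17,
    "Non-responsive": 166,
}


def percentages(counts: dict[str, int], exclude: tuple[str, ...] = ()) -> dict[str, int]:
    """Percentage of each category, rounded to a whole number, after
    dropping the categories named in `exclude` from the denominator."""
    kept = {k: v for k, v in counts.items() if k not in exclude}
    total = sum(kept.values())
    return {k: round(100 * v / total) for k, v in kept.items()}


all_valid = percentages(counts)  # denominator: 423
responsive_only = percentages(counts, exclude=("Non-responsive",))  # denominator: 257

print(all_valid)
print(responsive_only)
```

The first call divides by all 423 valid responses; the second drops the 166 non-responsive cards, so the denominator becomes 257.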

When question topics were taken into account, the highest number overall, with 34% of the responses, fell into the Research Process category. The second highest number (27%) were sorted into the Evaluating Sources category. Production of Information, Search, Structure/Navigation, Citing, and Access all had similar percentages of the responses, 1% were considered Miscellaneous, and the Format, Getting Help, and Off-Campus categories had no responses.

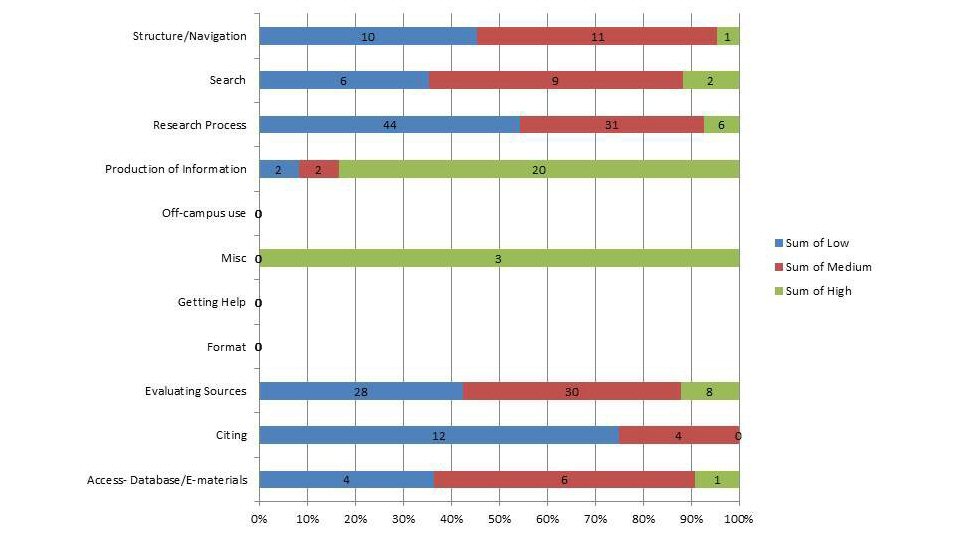

When topics were filtered by cognitive development level, several correlations emerged: questions about Research Process and Evaluating Sources, the two most popular categories, tended to be asked by students in the Low and Medium ranges. Production of Information questions, on the other hand, tended to come from students in the High group. In fact the High group, less than half the size of either the Low or Medium groups, posed mostly questions in this topic category; they were also the only group that asked Miscellaneous questions. Aside from these two exceptions, the High group's questions tended to mirror the general trend, as they were primarily about Research Process and Evaluating Sources (see fig. 1).

Figure 1 Cognitive Development Level % within Topics
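Figure 1 reports, for each topic, what share of that topic's questions came from each cognitive development level. The study's figures were produced in Excel; the sketch below is one assumed way to compute the same kind of "level % within topic" table from coded cards. The function name is hypothetical, the records are invented toy data for illustration only (not the study's results), and non-responsive cards are assumed to have been removed beforehand, as described in the Methods.

```python
from collections import Counter, defaultdict


def level_share_within_topics(records):
    """Percentage of each topic's questions that came from each level.

    `records` is an iterable of (level, topic) pairs, one per coded
    question; non-responsive cards should already be excluded.
    """
    by_topic = defaultdict(Counter)
    for level, topic in records:
        by_topic[topic][level] += 1

    shares = {}
    for topic, level_counts in by_topic.items():
        total = sum(level_counts.values())
        # Each topic's shares sum to 100, as in a "% within topic" chart
        shares[topic] = {level: 100 * n / total for level, n in level_counts.items()}
    return shares


# Invented toy records for illustration only (not the study's data)
toy_records = [
    ("Low", "Evaluating Sources"),
    ("Low", "Evaluating Sources"),
    ("Medium", "Evaluating Sources"),
    ("Low", "Evaluating Sources"),
    ("High", "Production of Information"),
    ("Medium", "Production of Information"),
    ("High", "Production of Information"),
    ("High", "Production of Information"),
]

for topic, share in level_share_within_topics(toy_records).items():
    print(topic, share)
```

Normalizing within each topic rather than within each level is what allows a small group, such as the 42 High responses, to dominate one topic category while still asking mostly about other topics overall.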

Discussion

This study provides baseline data characterizing the intellectual development of first-year students at this institution midway through their first year. Although the study produced some valuable data and the method appears to be sound, many questions remain. How should the non-responsive ("No Answer/No Question") responses be interpreted? Should a statement that the student has no questions be coded differently from a simple failure to write a response? And is there a way to decrease the percentage of students who do not respond to this question?

In some cases, small differences in wording determined how a question was coded; for example, according to the codebook, "what is the best way to find articles" would be categorized differently from "what is the correct way to find articles." At what point does the brevity of the responses cast doubt on the validity of the analysis?

Additionally, some "left wondering" questions were not easily categorized. Procedural questions such as "How do I connect to library resources via the campus VPN?" could be construed as low-level (because it is procedural), or medium to high level (because the student is engaging in forward-looking, predictive thought). In the end, categorizing these questions separately, thereby eliminating them from the analysis, was the clearest solution.

Both of these issues could be addressed by also analyzing students' responses to the other minute paper question: "What is the most important thing you learned?" This analysis was not attempted in the current study, in order to keep the research methods simple and straightforward, but it seems worth considering as part of a follow-up study.

Matching question topics to cognitive development levels was not especially enlightening, and the correlations were predictable in several ways. The Production of Information category contained considerably more questions from High students than from any other cognitive development level. This was to be expected, because this kind of question implies thinking beyond a simple dualistic world and putting oneself in the shoes of a researcher or author; such questions are therefore likely to reveal a more sophisticated intellect. Conversely, some question categories would be considered Low or Procedural by definition: the Getting Help and Off-Campus categories are good examples, as they tend to include "simple" questions about using the campus VPN or the hours of the Library reference desk. The high number of Evaluating Sources questions is to be expected, as this was the subject of the lesson and thus the topic was on the students' minds. Research Process is likely the most popular category because it encompasses a wide range of queries related to conducting research, including how to get started, how to interpret and use sources, and how to develop effective habits as a researcher. Follow-up work in this area might include re-coding the questions into topic areas that are more useful in the context of intellectual development theory.

Conclusions

Clearly, there are limitations to this method of gauging student cognitive development: it cannot be as reliable as a determination made via a series of interviews or even a questionnaire such as that developed by Schommer. However, it does offer a "snapshot" of student intellectual development. While it is not recommended that every instruction librarian repeat all the details of the method described in this article, the practice of collecting free-form student feedback has proven to be very useful in several ways. Here are some immediate take-aways:

- Use checklists and ranking exercises with caution: during lesson planning, it was thought that engineering students would be more engaged in the activity if checklists and rankings were included, and it is very likely that this was true. However, these tools also led to the misperception that there exists a specific rankings number that is a "usability cut-off"; students in the "Low" group most likely were left with the impression that there is a black and white schema for determining whether sources are acceptable for use in their project.

- "Correct way"/"Better way" questions present a learning opportunity: in research there is often no "correct" way, and the "best" way may depend on the situation. As adults who are presumably long removed from the lower cognitive positions, librarian instructors can benefit from a reminder of students' beliefs and mindset about the research process; by approaching information literacy instruction with this common student assumption in mind, librarians can be more effective instructors. For example, knowledge of the characteristics of the lower Perry positions proved helpful when this author was asked to help write curriculum for the university's Freshman Success seminar: the resulting lesson on Library Research Skills emphasized the ever-evolving nature of scientific research and the different formats in which such research is communicated to different audiences.²

- Identify Procedural and Other Common Questions: collecting student feedback over the course of several semesters allows librarians to become familiar with the most commonly asked questions. This information can be helpful in planning online tutorials, course help web pages, and prioritizing the content of lesson plans for future information literacy one-shots. One example specific to this institution involves the course textbook for the freshman writing class. The text is authored and edited by local faculty, and when asked to revise the chapter on Library Research, this author was able to tailor the content to address the most common questions at a level that would speak to the general cognitive positions of the student readers.

- Extend Learning: by collecting student feedback and returning written answers to students' specific questions, librarian instructors extended the learning experience and likely improved retention of lesson content. On the other hand, responding to student queries can be a lengthy and time-consuming process, even after accruing three semesters' worth of written answers. Since library instruction is often scheduled at the beginning of a research paper assignment, the librarian must return responses quickly if they are to be of any help. As public services librarians, we have been unwilling to leave questions unanswered; fortunately, the process has at least become less time-consuming.

Future research in this area might include using this method to gauge cognitive development levels of first-year students at another institution in order to look for differences between engineering students and those who are studying other fields. It would also be worthwhile to conduct a longitudinal assessment on a select group of students at this institution to better understand how the development of cognitive positions and information literacy/research skills might be correlated. Finally, as a great deal of effort has been put into lessening the gender imbalance at this institution (mirroring the larger effort to get more women into STEM fields), studies involving gender-specific cognitive development characteristics would also be of interest.

Acknowledgements

I am grateful to all who offered advice and feedback as I underwent the stages of conducting this study and preparing it for publication: Patricia Andersen, Lisa Dunn, Jeanne Davidson and the audience members at the STS Research Forum 2015, and the ISTL editors and anonymous reviewers. I also express my thanks to the Spring 2015 EPICS students and the librarians who instructed them.

Notes

1 From an average of 3.27 as freshmen; only one quarter of subjects tested at position 5 or above as seniors (Pavelich and Moore 1996).

2 Two frames from the ACRL Framework for Information Literacy for Higher Education (then in draft form) formed the basis of the lesson: Scholarship as Conversation and Information Creation as a Process (Association of College and Research Libraries 2015).

References

Association of College and Research Libraries. 2000. Information Literacy Competency Standards for Higher Education. Chicago: Association of College & Research Libraries. [accessed 2014 Aug 1]. Available from http://www.ala.org/acrl/standards/informationliteracycompetency

Association of College and Research Libraries. 2015. Framework for Information Literacy for Higher Education. [accessed 2015 Jul 20]. Available from http://www.ala.org/acrl/standards/ilframework

Belenky, M.F., Clinchy, B.M., Goldberger, N.R., & Tarule, J.M. 1986. Women's Ways of Knowing: the Development of Self, Voice, and Mind. New York: Basic Books.

Colorado School of Mines. 2014. Profile of the Colorado School of Mines Graduate.

Fosmire, M. 2013. Cognitive development and reflective judgment in information literacy. Issues in Science & Technology Librarianship. DOI:10.5062/F4X63JWZ.

Gatten, N.J. 2004. Student psychosocial and cognitive development: theory to practice in academic libraries. Reference Services Review. 32(2):157-163.

Jackson, R. 2008. Information literacy and its relationship to cognitive development and reflective judgment. New Directions for Teaching and Learning. 2008(114):47-61. [accessed 2014 Jan 3]. DOI:10.1002/tl.316

King, P.M. & Kitchener, K.S. 1994. Developing Reflective Judgment: Understanding and Promoting Intellectual Growth and Critical Thinking in Adolescents and Adults. San Francisco: Jossey-Bass.

Marra, R.M., Palmer, B. & Litzinger, T.A. 2000. The effects of a first-year engineering design course on student intellectual development as measured by the Perry Scheme. Journal of Engineering Education. 89:39-45.

Olds, B.M., Miller, R.L. & Pavelich, M.J. 2000. Measuring the intellectual development of students using intelligent assessment software. 30th Annual Frontiers in Education Conference: Building A Century of Progress. (IEEE Cat. No.00CH37135) 2.

Pavelich, M.J. & Moore, W.S. 1996. Measuring the effect of experiential education using the Perry model. Journal of Engineering Education. 85:287-292. [accessed 2014 May 2]. DOI:10.1002/j.2168-9830.1996.tb00247.x

Pavelich, M.J., Olds, B.M. & Miller, R.L. 1995. Real-world problem solving in freshman-sophomore engineering. New Directions for Teaching and Learning. 61:45-54.

Perry, W.G. 1970. Forms of Intellectual and Ethical Development in the College Years: A Scheme. New York: Holt, Rinehart, and Winston.

Rapaport, W. 2013. William Perry's Scheme of Intellectual and Ethical Development. [accessed 2015 Jan 2]. http://www.cse.buffalo.edu/~rapaport/perry.positions.html

Schneider, K. 2012. What do graduating seniors say about skills, abilities, and learning experiences? Achieving Student Learning Outcomes. November. [accessed 2015 May 21]. Available from: {https://web.archive.org/web/20150416043749/http://inside.mines.edu/Assessment-Newsletters}.

Schneider, K. 2013. Comparison of Senior Survey results (March 2013) and Alumni Survey results (Fall 2012). Achieving Student Learning Outcomes (May), 2-5. [accessed 2015 May 21]. Available from: {https://web.archive.org/web/20150416043749/http://inside.mines.edu/Assessment-Newsletters}.

Schneider, K. 2014. Senior Survey Results. Golden, CO.

Schommer, M. 1994. Synthesizing epistemological belief research: Tentative understandings and provocative confusions. Educational Psychology Review 6:293-319. [accessed 2014 Jun 25]. DOI: 10.1007/BF02213418

Schommer, M. & Walker, K. 1995. Are epistemological beliefs similar across domains? Journal of Educational Psychology 87:424-432.

Appendix 1: Codebook

CODEBOOK

compiled by L. Vella, 12/2014

For rating student minute paper responses according to cognitive development level

Low (equivalent to "basic dualism" in Perry's scheme)

At the lower end of the scale, students ask questions or make statements that indicate a belief in black and white answers and authorities who are always correct. Student responses indicate that there must be a "right" answer for every problem or a correct way to search for the right answer, and the librarian/instructor's job is to show students how to do this. Vocabulary includes words such as "exact," "correct," "cut-and-dry," "always," "best," or "easiest." Examples of student responses:

- What is the minimum ranking in either category [scholarly, authoritative] that can be used as a valid source?

- Where exactly am I going to be able to find exact resources?

- I wonder if it is better to always use an article instead of a website

- Is there a cut and dry way to tell if a source is scholarly?

- What kinds of scholarly articles would be useful to my EPICS project?

- Is there a complete list of these [reputable journal/websites] sites… I assume so, but I want to be sure

- I'm curious on the best way to locate the trustworthy sources

- I'm still wondering where exactly the best place to find scholarly articles is. Internet? Library? Other?

Medium (equivalent to "full dualism" or "early multiplicity")

As they move higher on the cognitive development scale, students ask questions or make statements indicating an acceptance of disagreement between authorities; students still believe correct answers exist and want to know how to determine which of the multiple answers are to be believed. Responses may include words such as "trust," "reputability," and "conflicting." Examples of student responses:

- What is the most efficient way to find all of these scholarly articles?

- I am still worried about conflicting information and which to trust more, and I am wondering how to compare reputabilities.

- How can I better determine if an article or website is actually giving me information I want

- How to best search for a scholarly article

- I'm left wondering how we should judge the usefulness of a source—I have an idea, but I'm wondering if there are judging criteria like for judging scholarly or authoritative objects

- I learned that opinion pieces can be featured in reputable journals, so you can't just assume everything is fact

- Is there a search engine for just scientific or scholarly publishers?

- What if there are two scholarly sources with opposite information, but both articles are reputable?

High (equivalent to "late multiplicity")

Students presenting a higher stage of cognitive development are more likely to see solutions to problems in shades of gray rather than in black/white or good/bad terms. They are also more likely to ask questions that are philosophical or speculative in nature, demonstrating that their thought processes extend beyond a procedural view of the research process. Responses may include words such as "determine," "differentiate," and "judge." Examples of student responses:

- I am still left wondering about research techniques and how to find good articles and websites online

- How much time will I need to actually spend researching?

- How can I know who sponsors the article?

- How many websites have I used in research that weren't actually very reputable?

- One question I have is about non-published sources. How should we consider asking a professional weighted against published works in credibility?

- I am still left wondering on how to determine the reliability of a journal. It is perfectly possible for a journal to have a closed peer-review that supports a specific viewpoint, and I would like to know how to differentiate whether this could be the case.

- I'm wondering what some good subjects are related to architecture to find other articles

- Can articles be partially scholarly in the sense that one part of an article is extremely useful but the rest is inaccurate?

References

Jackson, R. 2008. Information literacy and its relationship to cognitive development and reflective judgment. New Directions for Teaching and Learning 2008(114):47-61. doi:10.1002/tl.316

Perry, W.G. 1970. Forms of Intellectual and Ethical Development in the College Years: A Scheme. New York: Holt, Rinehart, and Winston, Inc.

Rapaport, W. 2013. William Perry's Scheme of Intellectual and Ethical Development. Retrieved January 02, 2015, from http://www.cse.buffalo.edu/~rapaport/perry.positions.html

Appendix 2: Minute Paper and Informed Consent Form

Minute Paper and Informed Consent Form (PDF)


This work is licensed under a Creative Commons Attribution 4.0 International License.